Chapter 02/07

History of Big Data

Snapshot

Learn about the history of big data and data analytics. Discover key events and milestones that helped shape big data, from the gathering and processing of 19th-century census data to early advancements in data technology.

Key Terms:

- Tabulating machine

- von Neumann architecture

- Transistor

- Integrated circuits

- Moore’s Law

- Information overload

- Big Data

- Internet of things

- Artificial intelligence

- Distributed computing

Since the beginning of recorded history, cultures have collected, stored and analyzed data to bring order to their civilizations. [1]

Data collection and processing helped early societies organize and understand disconnected and sometimes complicated sets of information. A census is one example of this extensive gathering and processing of data.

Census Data From Ancient Babylon to the 19th Century

Census Data Gathering and Processing

Beginning with the ancient Babylonians, governments have used census data to plan food supplies, estimate workforce and army sizes, document land ownership and levy taxes. [2] In 1086, English officials completed a massive land survey and recorded their findings in a 400-page manuscript called the Domesday Book. An extensive collection, the Domesday Book includes records on 13,418 places, listing landholders, their tenants, the amount of land they owned, the number of people living on the land, and the buildings and natural resources present on the land. The entire process took more than a year. [3]

Historians working on the 1086 Domesday Book

By the end of the 19th century, the United States census, conducted every 10 years, had become a major technical challenge for the government. The 1880 census took seven years to process, and with the nation’s rapidly growing population, officials estimated it would take even longer to complete the 1890 census. [4]

A Quicker Way To Process Census Data

Mechanical engineer Herman Hollerith offered a solution for the logjam. Hollerith invented a tabulating machine that could count and record data using punch cards. Each question on the census form was assigned a particular location on the punch card, which could then be easily “read” and recorded by the tabulating machine. If the card had a hole punched in the location for marital status, that indicated that the person was married. If a hole wasn’t punched in that location, the person was single. [5]
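The punch-card idea described above can be illustrated with a minimal sketch. It assumes a hypothetical card layout in which each yes/no question owns one hole position; the field names and positions are invented for illustration and are not the layout of the real 1890 census card.

```python
from collections import Counter

# Hypothetical card layout: each census question maps to one hole position.
# These positions are illustrative, not the layout of the real 1890 card.
FIELD_POSITIONS = {"married": 0, "owns_home": 1, "can_read": 2}


def punch_card(answers):
    """Return the set of punched positions for one person's answers."""
    return frozenset(
        FIELD_POSITIONS[field] for field, value in answers.items() if value
    )


def tabulate(cards):
    """Count, for each field, how many cards have a hole at its position."""
    counts = Counter()
    for card in cards:
        for field, position in FIELD_POSITIONS.items():
            if position in card:
                counts[field] += 1
    return counts


cards = [
    punch_card({"married": True, "owns_home": False, "can_read": True}),
    punch_card({"married": False, "owns_home": False, "can_read": True}),
    punch_card({"married": True, "owns_home": True, "can_read": False}),
]
totals = tabulate(cards)
print(totals["married"], totals["owns_home"], totals["can_read"])
```

The machine never interprets an answer; it only detects whether a hole is present at a known position and advances a counter, which is why the same hardware could tally any question assigned a location on the card.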

A woman with a Hollerith pantograph punch. The keyboard is for the 1920 US Census population card.

Officials had estimated that the 1890 census would take 10 years to complete, but with Hollerith’s tabulating machine, they processed the census in just 18 months. [6]

The Advent of Computers

Hollerith’s tabulating machine laid the groundwork for faster and simpler collection, recording and processing of census data. However, by the middle of the 20th century, his invention was surpassed by programmable computers capable of performing 20,000 operations per second. [7] Those computers were based on a design created in 1945 by mathematician John von Neumann. The von Neumann architecture allowed computers to achieve unprecedented speed and flexibility by storing their programming instructions internally, in the same memory as their data, where a central processing unit (CPU) could fetch and execute them. [8] Many of the components of the von Neumann architecture are still used in today’s computers, including the control unit, CPU, internal memory, and inputs and outputs. [9]

The Silicon Revolution

A replica of the first transistor, invented at Bell Labs, December 23, 1947.

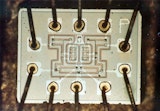

In 1948, Bell Laboratories introduced the transistor, a solid-state switch that could be triggered by electrical signals. [10] Transistors took the place of the less reliable vacuum tubes used in earlier computers, which were hundreds of times larger and required their own power generator. The transistor was followed in 1958 by the development of the integrated circuit [11], an array of transistors and other electronic components etched onto a wafer-thin piece of material. This material, usually silicon, is called a semiconductor because it conducts electricity under some conditions but not others, making it a good medium for controlling the flow of electricity to transistors.

Logical NOR integrated circuit from the computer that controlled the Apollo spacecraft.

Welcome to Information Overload

Computers use integrated circuits, or microchips, in their processors and memory units to convert electrical signals into instructions. Since the introduction of the microchip, computer scientists have worked constantly to reduce chip size while improving chip performance. This led Intel co-founder Gordon Moore to predict in 1965 that the number of components on a chip would double every year, a pace he revised in 1975 to every two years. The prediction has proven so accurate that it is now called Moore’s Law [12], although that rate of increase may slow in the coming decades.
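To see how quickly a fixed doubling period compounds, the following sketch projects a quantity forward under a two-year doubling assumption. The starting value of 1,000 is hypothetical and chosen only to keep the arithmetic easy to follow; the function name is likewise an illustration.

```python
def projected_value(initial_value, years, doubling_period=2.0):
    """Project a quantity that doubles once every `doubling_period` years."""
    return initial_value * 2 ** (years / doubling_period)


# Hypothetical starting point of 1,000 units.
after_10_years = projected_value(1_000, 10)  # five doublings
after_20_years = projected_value(1_000, 20)  # ten doublings
print(after_10_years, after_20_years)
```

Ten years gives five doublings (a 32-fold increase), while twenty years gives ten doublings (a 1,024-fold increase), which is why even a modest doubling period produces dramatic growth over a few decades.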

As computer processing power increased exponentially, scientists began looking for ways to harness this power to cope with the growing tide of data, or information overload, swamping many segments of society toward the end of the 20th century. Computer scientist Peter J. Denning warned that data was being generated so fast, it would soon “overwhelm our networks, storage devices and retrieval systems, as well as the human capacity for comprehension.” [13]

In 1997, NASA researchers Michael Cox and David Ellsworth became the first to use the term “big data” to describe this phenomenon. [14] By then, the internet had become a reality, opening the floodgates of data that would connect people around the globe and transform modern society. In 1999, Kevin Ashton recognized that the prevalence of networked sensors in industry supply chains and public spaces was creating what he termed the internet of things (IoT), a new and growing source of digital traffic. [15]

The 2000s and Beyond

In the 2000s, as the volume of digital storage surpassed analog storage [16], researchers began searching for advances in computer technology to help meet the challenges of big data. By 2008, distributed computing had become one widely adopted solution. [17] Distributed computing is the use of remotely connected computer servers to store and process large data sets. In recent years, advances in artificial intelligence and machine learning have driven the development of new technologies that leverage big data [18], including chatbots that interact with web visitors in real time and personal home assistants such as Google Home and Amazon Echo.
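The core pattern of distributed computing, splitting a data set, processing the pieces independently and merging the partial results, can be sketched on a single machine. In this illustration, threads stand in for remote servers and the function names are hypothetical; it is not a description of how any particular distributed system is implemented.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split(records, n_workers):
    """Divide the records into n_workers roughly equal shards."""
    return [records[i::n_workers] for i in range(n_workers)]


def count_shard(shard):
    """Work done independently by each stand-in 'server': count its words."""
    counts = Counter()
    for line in shard:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(records, n_workers=3):
    """Count words by processing shards in parallel and merging the results."""
    shards = split(records, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(count_shard, shards))
    total = Counter()
    for partial in partials:
        total.update(partial)  # merge step: add up the partial counts
    return total


records = ["big data", "data stream", "big data analytics", "data"]
result = distributed_word_count(records)
print(result["data"], result["big"])
```

Because each shard is counted without reference to the others, adding more workers lets the same code handle a larger data set; only the final merge needs to see every partial result.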

Today, the challenges of big data involve more than just the amount of information generated every day; they also arise from the variety, complexity and speed of the data stream.

Big Data Through the Years

Learn more about big data’s growth and impact by exploring these important milestones and key events in the history of big data.

1889: Census crisis

Faced with a 25 percent increase in the U.S. population in the 1880s, officials with the U.S. Census Bureau realize it will take more than 10 years to tabulate the 1890 census. Herman Hollerith’s tabulating machine solves the problem by using punch cards to speed the count.

1944: Information explosion

Fremont Rider, a librarian at Wesleyan University, publishes a book titled The Scholar and the Future of the Research Library in which he estimates that university libraries are doubling in size every 16 years with no end in sight. [19]

1946: An early programmable computer

The Electronic Numerical Integrator and Computer (ENIAC) is dedicated at the University of Pennsylvania. One of the first programmable electronic computers, ENIAC weighs 30 tons and draws so much power that lights dim across Philadelphia when it is turned on. [20]

1970: Relational database

Edgar F. Codd, a mathematician at IBM’s San Jose Research Laboratory, develops the relational database, an innovative system for organizing data into tables with columns and rows that permit easy access and sorting. Analysts can use Structured Query Language (SQL) to query the data and write reports based on it. The relational database makes it possible to analyze large amounts of structured data. [21]
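A small sketch shows what organizing data into rows and columns and querying it with SQL looks like in practice. It uses Python’s built-in sqlite3 module with an in-memory database; the table name, columns and sample rows are invented for illustration.

```python
import sqlite3

# In-memory database; the table and its rows are hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE census (name TEXT, state TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO census VALUES (?, ?, ?)",
    [("Ada", "NY", 36), ("Ben", "NY", 52), ("Cora", "OH", 41)],
)

# One declarative query groups, counts, averages and sorts the rows.
rows = conn.execute(
    "SELECT state, COUNT(*), AVG(age) FROM census GROUP BY state ORDER BY state"
).fetchall()
conn.close()
print(rows)
```

The query states what result is wanted (people per state and their average age) rather than how to loop over the records, which is what makes reporting on structured data straightforward.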

1975: The personal computer

The invention of the solid-state microchip in the 1950s leads to the development of the first personal computers in the 1970s. In 1975, IBM introduces the IBM 5100, complete with a built-in monitor, internal memory and a magnetic tape drive. Apple introduces the Apple II, one of the most successful mass-produced microcomputers of the era, in 1977. Annual sales of PCs peak at 362 million units in 2011. [22]

1989: World Wide Web

Tim Berners-Lee, a British computer scientist, publishes a proposal for an information network that would become the World Wide Web. By 1998, there are 750,000 commercial sites on the Web, including e-commerce giants Amazon, eBay and Netflix. [23]

1997: Social media revolution

The internet sees the launch of some of the first social media platforms, including Six Degrees in 1997, Blogger in 1999, Friendster in 2002, Myspace in 2003, Facebook in 2004 and Twitter in 2006. Social media traffic explodes as billions of internet users connect via personal computers, tablets and smartphones over the coming decades. [24]

1999: Internet of things

The term internet of things (IoT) is coined by British entrepreneur Kevin Ashton, who notes that digital sensors such as radio frequency identification tags are revolutionizing the tracking of products in the global supply chain. [25]

2000: The problem of big data

Scientists report that the world’s annual production of print, film, optical and magnetic content would fill about 1.5 billion gigabytes of storage. Peter Lyman and Hal R. Varian at the University of California, Berkeley, write: “It is clear that we are all drowning in a sea of information. The challenge is to learn to swim in that sea, rather than drown in it.” [26]

2001: The big Vs

Doug Laney, an analyst at META Group (later acquired by Gartner), identifies volume, velocity and variety as the primary dimensions of big data. [27]

2002: Digital surpasses analog

The amount of data being stored as digital files surpasses the amount being stored in analog formats. [28]

2004: Distributed computing

Google begins developing Bigtable, a distributed storage system for handling big data, and describes it in a paper published in 2006. Hadoop, an open-source distributed computing framework from the Apache Software Foundation, becomes a top-level Apache project in 2008. Distributed computing makes it possible to run NoSQL databases that can be easier to scale across many servers than relational databases. [29]

2005: Media explosion

The total media supply to homes in the United States is 900,000 minutes per day, up from only 50,000 minutes per day in 1960. [30]

2011: Storage capacity increases

The journal Science estimates that the world’s capacity to store information grew at a rate of 25 percent a year between 1987 and 2007. [31]

2014: IoT in the billions

According to Gartner, the IoT now includes 3.7 billion connected devices. [32]

2015: Smart cities on the rise

More than 1.1 billion smart devices are in use in smart cities, including smart LED lighting, health care monitors, smart locks and various networked sensors to monitor air pollution, traffic and other activities. [33]

2015: Hello, Alexa

Amazon releases its intelligent personal home assistant, Amazon Echo, a smart speaker that can play music on demand, answer questions and provide news, traffic and weather reports. The device is able to acquire new skills from a library of programs. [34]

2020: Exponential growth

Annual digital data generation is expected to increase 4,300 percent by 2020. [35]

References

[1] “A brief history of big data everyone should read.” World Economic Forum. February 2015.

[2] “A brief history of big data everyone should read.” World Economic Forum. February 2015.

[3] “Frequently Asked Questions.” The Domesday Book Online.

[4] “Big Data and the History of Information Storage.” Winshuttle.

[5] “Tabulation and Processing.” U.S. Census Bureau.

[6] “A Brief History of Data Analysis.” FlyData. March 2015.

[7] “1960 Census: Chapter 3: Electronic Data Processing.” U.S. Census Bureau. https://www2.census.gov/prod2/decennial/documents/04201039ch3.pdf

[8] “Computer Organization | Von Neumann architecture.” GeeksForGeeks.

[9] “Computer Organization | Von Neumann architecture.” GeeksForGeeks.

[10] “Computer History 101: The Development Of The PC.” Tom’s Hardware. August 2011.

[11] “Computer History 101: The Development Of The PC.” Tom’s Hardware. August 2011.

[12] “The Age of Analytics: Competing in a Data-Driven World.” McKinsey Global Institute. December 2016.

[13] “A Very Short History Of Big Data.” Forbes. May 2013.

[14] “A Very Short History Of Big Data.” Forbes. May 2013.

[15] “Internet of Things: A Review on Technologies, Architecture, Challenges, Applications, Future Trends.” International Journal of Computer Network and Information Security. 2017.

[16] “A Very Short History Of Big Data.” Forbes. May 2013.

[17] “Interview with James Kobielus, Big Data Evangelist, IBM.” OnlineEducation.com.

[18] “Big data: The next frontier for innovation, competition, and productivity.” McKinsey Digital. May 2013.

[19] “Big Data and the History of Information Storage.” Winshuttle.

[20] “70th Anniversary: ENIAC Contract Signed.” The National WWII Museum. May 2013.

[21] “Big Data and the History of Information Storage.” Winshuttle.

[22] “The rise and fall of the PC in one chart.” MarketWatch. April 2016.

[23] “History of the World Wide Web.” NetHistory.

[24] “The History of Social Media [Infographic].” SocialMediaToday. April 2018.

[25] “Internet of Things: A Review on Technologies, Architecture, Challenges, Applications, Future Trends.” International Journal of Computer Network and Information Security. 2017.

[26] “How Much Information?” School of Information Management and Systems, University of California at Berkeley. 2000.

[27] “3D Data Management: Controlling Data Volume, Velocity and Variety.” Gartner. 2001.

[28] “A Very Short History Of Big Data.” Forbes. May 2013.

[29] “Bigtable: A Distributed Storage System for Structured Data.” Google. 2006.

[30] “A Very Short History Of Big Data.” Forbes. May 2013.

[31] “Big data: The next frontier for innovation, competition, and productivity.” McKinsey Digital. May 2013.

[32] “8 ways the Internet of things will change the way we live and work.” The Globe and Mail.

[33] “Smart Cities Will Use 1.1 Billion Connected Devices in 2015.” PubNub. April 2015.

[34] “Amazon Echo.” Wikipedia.

[35] “5 Ways Big Data Will Change Sales and Marketing in 2015.” Inc. January 2015.
